Artificial intelligence is no longer a future consideration for university leadership. It's an operational reality reshaping budgets, workforce planning, and daily campus functions. Yet most institutions remain underprepared. A 2024 survey from Inside Higher Ed found only 9% of higher education CTOs believed their institutions were ready for AI's rise.

Meanwhile, students have moved ahead. Research from HEPI shows AI usage among undergraduates jumped from 66% in 2024 to 92% in 2025. They expect AI literacy as part of their education. They expect AI-powered services. And they will compare your institution to competitors already delivering both.

The AI trends outlined here carry direct implications for how universities allocate resources, manage personnel, run operations, handle compliance, and plan for the next five years. Ignoring them carries measurable costs. Acting on them requires informed decisions.

Here are the five trends that demand attention.

Trend 1: Budget Reallocation and AI Investment Pressures

Universities are spending more on AI. The question is whether that spending is strategic or reactive.

Most institutions have not created dedicated AI budget lines. Instead, AI costs get absorbed into existing categories: IT infrastructure, software licensing, compliance, professional development. This makes tracking actual AI expenditure difficult and strategic planning nearly impossible. A survey from Constellation Research found 79% of executives are increasing AI budgets, with 32% expecting increases of 50% or more. But few organizations have clear visibility into where that money goes.

The biggest expenses fall into predictable categories. Enterprise software vendors like Microsoft, Google, and Adobe now bundle AI features into existing products and raise subscription prices accordingly. Infrastructure costs include computing power, data storage, and security upgrades. Training expenses cover professional development for faculty and staff. Vendor contracts for specialized AI tools add another layer.

As reported by Higher Ed Dive, Stony Brook University recently committed $15 million to a universitywide AI initiative. That figure represents serious institutional commitment, but as their provost noted, it's both substantial and modest given AI's scope.

Does AI reduce costs? Sometimes. Georgia State University's Pounce chatbot handles hundreds of thousands of student inquiries annually, freeing staff for higher-priority work. Arizona State's Hey Sunny provides similar 24/7 support. An analysis featured in eCampus News projected UC Berkeley could save $5-10 million annually through automated administrative inquiries and another $5-8 million through AI-driven energy optimization.

But upfront implementation costs often exceed short-term savings. ROI is a multi-year calculation requiring patience most budget cycles don't accommodate. AI is not cheaper than traditional systems in year one. It may become cheaper by year three or four if implementation goes well.
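The multi-year arithmetic can be made concrete with a short sketch. The figures below are invented for illustration and are not drawn from any institution cited here. The function name `break_even_year` and its parameters are hypothetical. It compares cumulative costs (an upfront amount plus a flat annual running cost) against cumulative savings and reports the first year in which savings catch up.

```python
from typing import Optional, Sequence


def break_even_year(
    upfront_cost: float,
    annual_running_cost: float,
    annual_savings_by_year: Sequence[float],
) -> Optional[int]:
    """Return the first year (1-indexed) in which cumulative savings
    cover cumulative costs, or None if that never happens in the horizon."""
    cumulative_cost = upfront_cost
    cumulative_savings = 0.0
    for year, savings in enumerate(annual_savings_by_year, start=1):
        cumulative_cost += annual_running_cost
        cumulative_savings += savings
        if cumulative_savings >= cumulative_cost:
            return year
    return None


# Illustrative numbers only: savings start small and grow as adoption matures.
example = break_even_year(
    upfront_cost=1_000_000,
    annual_running_cost=200_000,
    annual_savings_by_year=[100_000, 500_000, 800_000, 900_000, 900_000],
)
print(example)  # 4
```

With these assumed inputs the project is still behind after year three and breaks even in year four. A fuller model would also discount future savings and allow running costs to change over time.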

The practical takeaway: Build AI into existing budget frameworks now. Track spending across departments. Set realistic timelines for ROI. And treat AI as ongoing operational infrastructure, not a one-time purchase.

Trend 2: Workforce Transformation and Staffing Shifts

AI will not eliminate university jobs overnight. But it is already changing what those jobs require and which roles remain necessary.

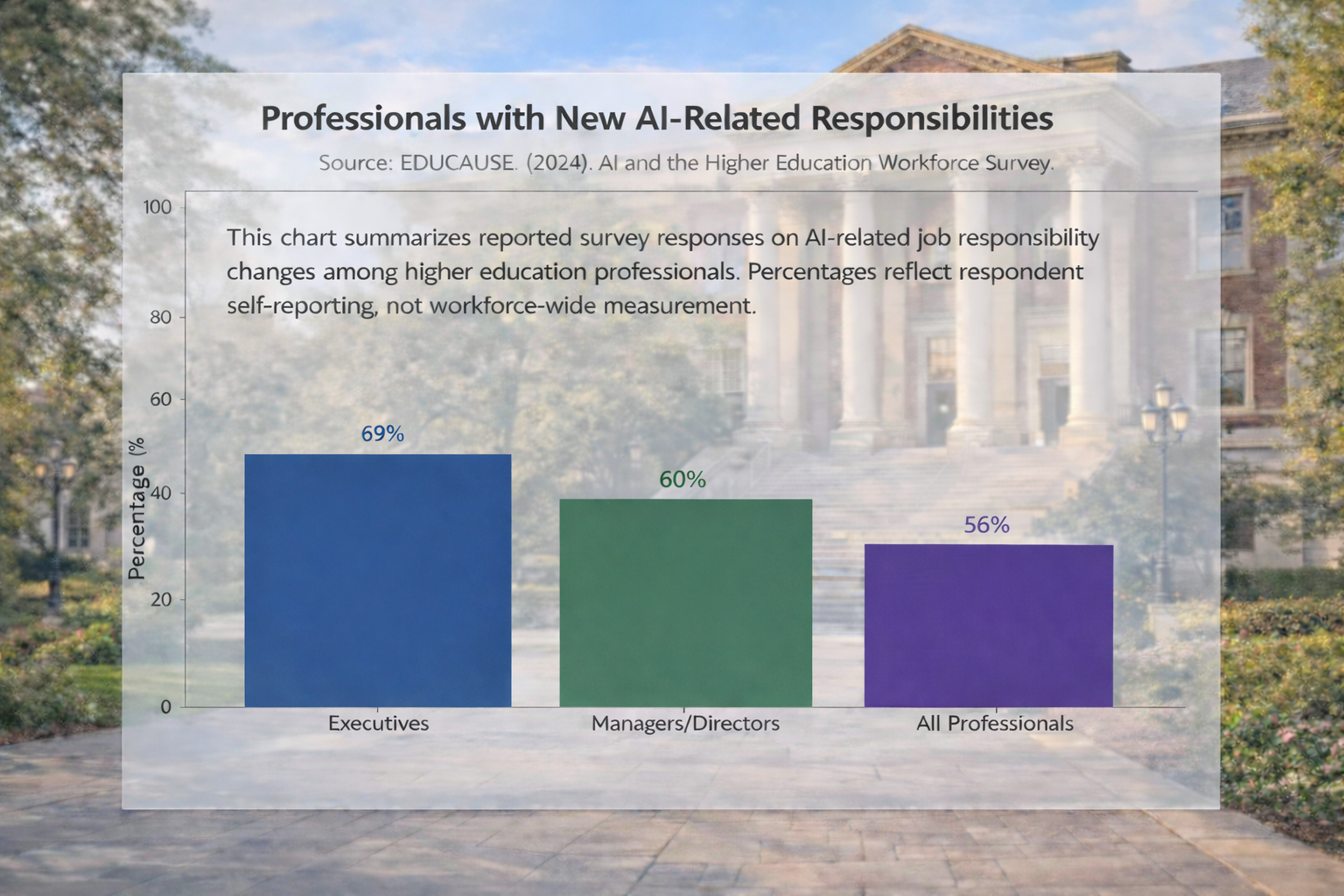

A survey from Educause found 56% of higher education professionals now have new responsibilities related to AI strategy. Executives lead the shift at 69%, followed by managers and directors at 66%. Staff and faculty are catching up, often without corresponding adjustments to workload or compensation.

The positions facing the highest risk involve routine, rules-based tasks. Administrative assistants handling scheduling and data entry. Admissions processors conducting initial application screening. Student services staff answering frequently asked questions. Finance personnel managing invoices and basic reporting. Research from McKinsey estimates 30-50% of current administrative tasks are automatable with existing tools.

Marketing and communications departments face particular pressure. AI can generate first drafts of recruitment emails, social media content, and basic copy faster than human writers. The role shifts from content creation to content oversight and strategy.

Universities are hiring differently in response. AI specialists, data scientists, and machine learning engineers are in demand. So are AI governance coordinators, ethics officers, and training staff who can help existing employees adapt. Human roles are shifting toward relationship-building, complex problem-solving, and the judgment calls AI cannot make.

Faculty workload is a growing concern. AI adds responsibilities without reducing existing duties. A report from the AAUP found 76% of surveyed academic professionals said AI implementation had reduced their enthusiasm for their jobs. Faculty must learn new tools, redesign assessments to account for student AI use, and integrate AI literacy into curriculum. Professional development requirements expand without compensatory time or pay.

The workforce question for leadership is not whether AI will replace staff. It's which tasks shift to machines, which roles evolve, and how you support employees through the transition. Wholesale layoffs create institutional knowledge loss and morale damage. Strategic reskilling preserves talent while capturing efficiency gains.

Trend 3: Operational Automation Across the Student Lifecycle

AI is automating university processes from recruitment through graduation. The institutions implementing these tools report measurable efficiency gains. Those without them face growing competitive disadvantage.

Admissions and Enrollment

Chatbots now handle 24/7 prospective student inquiries. According to Copilot.live, Temple University reduced call volume by 50% after implementing Ivy.ai. These tools answer questions about application requirements, deadlines, financial aid, and campus life without human intervention.

Predictive analytics forecast which applicants are likely to enroll, allowing targeted outreach. AI can screen applications for basic qualification criteria, flagging incomplete submissions and routing qualified candidates for human review. Scheduling automation reduces staff time on interview and campus tour coordination by an estimated 40%.
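The screening step described above can be sketched as a simple rules-based triage. Everything named here is a hypothetical illustration: the required documents, the GPA threshold, the queue names, and the `Application` fields are assumptions rather than any vendor's or institution's actual criteria. The sketch only routes applications and never rejects one automatically, so a person still makes every decision.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Hypothetical criteria; a real office would load its own published requirements.
REQUIRED_DOCUMENTS = {"transcript", "personal_statement", "recommendation"}
MINIMUM_GPA = 2.5


@dataclass
class Application:
    applicant_id: str
    gpa: float
    documents: Set[str] = field(default_factory=set)


def triage(app: Application) -> Tuple[str, List[str]]:
    """Return (queue, reasons). Routes every application to a human queue."""
    missing = sorted(REQUIRED_DOCUMENTS - app.documents)
    if missing:
        # Incomplete submissions are flagged so staff can follow up.
        return "incomplete", [f"missing {doc}" for doc in missing]
    if app.gpa < MINIMUM_GPA:
        # Below the basic criterion: send to staff rather than auto-reject.
        return "staff_exception_review", ["GPA below minimum"]
    return "qualified_review", []


complete = Application("a1", 3.4, {"transcript", "personal_statement", "recommendation"})
partial = Application("a2", 3.9, {"transcript", "personal_statement"})
print(triage(complete))
print(triage(partial))
```

Returning the reasons alongside the queue keeps the routing explainable, which matters if an applicant or auditor later asks why a file was handled a certain way.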

Advising and Student Support

Data from Inside Higher Ed shows 15% of colleges now use AI for student advising, with another 26% using predictive analytics to identify students at risk of dropping out. Early warning systems flag students showing signs of academic struggle based on attendance, assignment completion, and engagement patterns.
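An early warning flag built on attendance, assignment completion, and engagement might look roughly like the sketch below. It is a plain threshold check, not a predictive model, and the field names and cutoff values are assumptions chosen for illustration. Real systems would calibrate thresholds against historical outcomes and check for bias before anyone acts on a flag.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StudentActivity:
    student_id: str
    attendance_rate: float          # share of sessions attended, 0.0 to 1.0
    assignment_completion: float    # share of assignments submitted, 0.0 to 1.0
    lms_logins_last_14_days: int    # engagement proxy (hypothetical field)


# Hypothetical cutoffs for illustration only.
MIN_ATTENDANCE = 0.75
MIN_COMPLETION = 0.70
MIN_LOGINS = 3


def risk_signals(s: StudentActivity) -> List[str]:
    """List which of the three indicators fall below their cutoff."""
    signals = []
    if s.attendance_rate < MIN_ATTENDANCE:
        signals.append("low attendance")
    if s.assignment_completion < MIN_COMPLETION:
        signals.append("missing assignments")
    if s.lms_logins_last_14_days < MIN_LOGINS:
        signals.append("low engagement")
    return signals


def needs_outreach(s: StudentActivity, min_signals: int = 2) -> bool:
    """Flag a student for advisor outreach when enough indicators trip."""
    return len(risk_signals(s)) >= min_signals


student = StudentActivity("s1", attendance_rate=0.60,
                          assignment_completion=0.50,
                          lms_logins_last_14_days=5)
print(risk_signals(student), needs_outreach(student))
```

Requiring two signals before flagging is one way to limit false alarms. The flag is a prompt for a human advisor to reach out, not a decision about the student.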

As highlighted by HEM, the University of Toronto is piloting course-specific AI tutors that answer student questions about class materials around the clock. Unlike public chatbots, these run on secure platforms with access limited to course content, protecting both intellectual property and student privacy.

AI advisors handle routine questions, freeing human advisors for complex conversations about career planning, personal challenges, and academic difficulties requiring judgment and empathy.

Scheduling and Resource Allocation

AI optimizes class schedules based on student demand, faculty availability, and classroom capacity. This reduces scheduling conflicts and underutilized space. Enrollment forecasting improves resource allocation across departments.

Financial Aid and Business Operations

AI speeds approval workflows and flags inconsistencies in financial aid applications. Automated document processing reduces manual data entry. Invoice processing and payment automation are gaining traction in business offices.

The efficiency numbers are significant. Research from KPMG found AI can reduce basic procurement and administrative task time by up to 80%. Survey data from Ellucian shows over 80% of higher education leaders expect significant AI growth in student success and enrollment management applications.

These tools improve efficiency. They also raise student expectations. Once prospective students experience instant responses and personalized communication from one institution, they notice when others fall short.

Trend 4: Risk Management, Data Privacy, and Vendor Oversight

Every operational benefit from AI carries corresponding risk. Universities handling student data face compliance obligations that AI can either support or violate depending on implementation.

Data Privacy and FERPA

The Family Educational Rights and Privacy Act applies to AI tools processing student education records. This creates specific obligations. Student consent may be required before using their data with AI applications. Data minimization principles, as outlined in guidance from NMU, also apply: use only the information necessary for the AI function. Third-party AI vendors must comply with FERPA requirements and maintain appropriate data protection measures.
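Data minimization can be enforced in code before a record ever reaches an AI tool. The sketch below assumes a per-purpose allowlist of fields. The purposes, field names, and the `minimize` helper are hypothetical, and passing a record through a filter like this does not by itself establish FERPA compliance.

```python
from typing import Any, Dict, Set

# Hypothetical allowlists: each approved AI use gets only the fields it needs.
ALLOWED_FIELDS_BY_PURPOSE: Dict[str, Set[str]] = {
    "course_tutor": {"course_id", "enrolled_term"},
    "advising_chat": {"program", "credits_earned", "enrolled_term"},
}


def minimize(record: Dict[str, Any], purpose: str) -> Dict[str, Any]:
    """Return a copy of the record containing only fields approved for the purpose."""
    allowed = ALLOWED_FIELDS_BY_PURPOSE.get(purpose)
    if allowed is None:
        # Unknown purposes fail closed instead of passing data through.
        raise ValueError(f"no approved field list for purpose: {purpose}")
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "student_id": "000123",
    "name": "Example Student",
    "program": "Biology",
    "credits_earned": 45,
    "enrolled_term": "Fall",
    "gpa": 3.1,
}
print(minimize(record, "advising_chat"))
```

Failing closed on an unapproved purpose means a new AI integration cannot receive student data until someone has explicitly decided which fields it may see.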

AI systems can "hallucinate," generating false or misleading information. When that involves student records, the consequences extend beyond embarrassment to potential compliance violations. Institutions need clear policies on AI use with student data and regular audits of how AI tools handle sensitive information.

Regulatory Landscape

FERPA remains the primary federal framework for student data. The FTC finalized COPPA amendments in January 2025 tightening rules on children's online data. GDPR applies to any EU student data, requiring explicit consent and transparency about data use.

No comprehensive federal AI regulation exists for higher education. This means institutions must self-govern, developing policies that may exceed current legal requirements but protect against future regulatory action.

Vendor Management

AI procurement exposes gaps in how universities evaluate and manage technology vendors. Analysis from Educause Review found poor coordination between IT and procurement offices at many institutions. The volume of cloud-based AI tools now exceeds review capacity at most schools. Faculty and staff can download and deploy AI applications without institutional oversight.

Smaller institutions face particular challenges. Vendors prioritize large contracts with major universities and court elite institutions to burnish their products' reputations. Smaller schools may struggle to engage solution providers or negotiate affordable enterprise pricing.

Due diligence requirements for AI vendors include confirming FERPA compliance, reviewing data storage and encryption practices, establishing contractual limits on data use, and requiring transparency about how AI models make decisions.
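The four due diligence items above translate naturally into a checklist that blocks approval until every item is confirmed. This is a minimal sketch: the `VendorReview` structure and its field names are assumptions, and a real review would attach evidence such as contracts or audit reports to each item.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VendorReview:
    vendor_name: str
    ferpa_compliance_confirmed: bool
    storage_and_encryption_reviewed: bool
    contractual_data_use_limits: bool
    model_decision_transparency: bool


def open_items(review: VendorReview) -> List[str]:
    """Return the due diligence items that are still unresolved."""
    checks = {
        "FERPA compliance": review.ferpa_compliance_confirmed,
        "data storage and encryption": review.storage_and_encryption_reviewed,
        "contractual limits on data use": review.contractual_data_use_limits,
        "transparency about model decisions": review.model_decision_transparency,
    }
    return [label for label, passed in checks.items() if not passed]


def approved(review: VendorReview) -> bool:
    """A vendor passes only when no items remain open."""
    return not open_items(review)


review = VendorReview("Example AI Co.", True, True, False, False)
print(open_items(review), approved(review))
```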

Operational Risks

A survey from UNESCO found one in four universities has already encountered ethical issues linked to AI, from student overreliance to authorship disputes and algorithmic bias. Bias in AI-driven admissions, advising, or financial aid decisions creates legal exposure and reputational damage. Over-automation reduces human oversight and the nuanced judgment that complex student situations require.

Risk management is not a reason to avoid AI. It's a reason to implement AI thoughtfully with appropriate governance structures.

Trend 5: Strategic Planning and the Cost of Inaction

Universities adopt AI at different speeds. Those without strategic plans will find themselves reacting to crises rather than capturing opportunities.

Current Adoption Pace

Research from UNESCO shows 19% of institutions have formal AI policies, with another 42% developing guidance. That leaves roughly 40% without clear direction.

Faculty adoption shows similar patterns. Data from the Digital Education Council indicates 61% have used AI in teaching, but 88% report only minimal to moderate integration. The gap between institutional AI initiatives and actual classroom practice remains wide.

Regional variation is significant. Approximately 70% of European and North American institutions have or are developing AI frameworks. In Latin America and the Caribbean, that figure drops to 45%.

Consequences of Inaction

Institutions without AI strategies face predictable problems. Competitive disadvantage in recruitment as peer institutions offer AI-enhanced services. Staff burnout from ad hoc responses to AI disruptions. Potential compliance violations from uncoordinated tool adoption. Students graduating unprepared for AI-driven workplaces.

Analysis from Inside Higher Ed predicts major operational changes by 2027-28 for institutions slow to adapt. The timeline compresses as AI capabilities accelerate. Two years of careful planning is reasonable. Five years of delay may be unrecoverable.

Planning Essentials

Effective AI strategy requires cross-functional governance. IT, legal, academic affairs, and procurement must coordinate rather than operate independently. AI use policies should cover teaching, research, and administration with clear guidance on acceptable applications and prohibited uses.

Budget allocation for training across all employee levels is not optional. Faculty need support redesigning courses. Staff need support adapting workflows. Leadership needs information to make informed decisions.

Evaluation frameworks should precede AI purchases, not follow them. What problem does this tool solve? What data does it require? Who is the vendor and what are their compliance practices? What happens if the tool fails or the vendor relationship ends?

AI literacy belongs in curriculum across programs, not just computer science. Students in every field will encounter AI in their careers. Preparing them is a core educational obligation.

The 2030 Outlook

AI is becoming critical infrastructure for university operations. By 2030, agentic AI systems handling multi-step processes autonomously will move from pilot programs to standard practice. Human-AI collaboration will be the operational norm, not an experimental approach.

Institutions that build governance frameworks now will lead. Those that delay will scramble to catch up while managing the consequences of uncontrolled AI proliferation across their campuses.

Conclusion

These five trends connect. Budget decisions affect workforce planning. Workforce changes affect operational capacity. Operations generate data that creates compliance risk. Risk management requires strategic planning. Planning requires budget allocation.

University leadership cannot address AI in silos. The provost, CFO, CIO, and general counsel need shared understanding and coordinated action. Governance structures must cross traditional administrative boundaries.

AI adoption is not an experiment you can defer. It's an operational shift already underway. Your students use AI daily. Your competitors implement AI monthly. Your vendors build AI into every product update.

The question is not whether AI will change your institution. It's whether you shape that change or react to it. The institutions that act strategically in 2026 will define higher education's AI-enabled future. The rest will follow their lead or fall behind.

Start now. Assign ownership. Allocate resources. Build the governance structures that let you move confidently rather than cautiously. The cost of waiting exceeds the cost of acting.
